This page offers tutorials, resources, and hands-on lessons on Kalman filters, sensor fusion, and advanced estimation techniques such as unscented and cubature Kalman filters.

Posts

-

Unscented Kalman Filter

Shortly after Rudolf Kalman’s original publication, the Extended Kalman Filter (EKF) was developed to extend the Kalman filter to the state estimation of nonlinear systems. The EKF linearizes the nonlinear system around the current state estimate, which requires the analytical derivation of the Jacobians (first-order partial derivatives) of the system equations. For many years, this approach was the standard solution for nonlinear systems, which are common in practice.
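To make the contrast with linearization concrete, here is a minimal scalar sketch of the unscented transform, the core step of the UKF. It assumes a one-dimensional Gaussian state, the classic 2n + 1 sigma-point set with weights \( \kappa/(n+\kappa) \) and \( 1/(2(n+\kappa)) \), and \( \kappa = 2 \) (so that \( n + \kappa = 3 \)); the function names are hypothetical, and the second function mimics the EKF's first-order propagation.

```python
import math

def unscented_transform_1d(mean, var, f, kappa=2.0):
    """Propagate a scalar Gaussian N(mean, var) through f with 2n + 1 = 3 sigma points (n = 1)."""
    n = 1
    spread = math.sqrt((n + kappa) * var)
    sigma_points = [mean, mean + spread, mean - spread]
    weights = [kappa / (n + kappa), 0.5 / (n + kappa), 0.5 / (n + kappa)]
    ys = [f(p) for p in sigma_points]
    y_mean = sum(w * y for w, y in zip(weights, ys))
    y_var = sum(w * (y - y_mean) ** 2 for w, y in zip(weights, ys))
    return y_mean, y_var

def linearized_transform_1d(mean, var, f, dfdx):
    """EKF-style propagation: evaluate f at the mean and scale the variance by f'(mean)^2."""
    return f(mean), dfdx(mean) ** 2 * var

# Example: x ~ N(1, 1) pushed through f(x) = x^2
ut_mean, ut_var = unscented_transform_1d(1.0, 1.0, lambda x: x * x)
lin_mean, lin_var = linearized_transform_1d(1.0, 1.0, lambda x: x * x, lambda x: 2.0 * x)
```

For \( x \sim \mathcal{N}(1, 1) \) the exact moments of \( x^2 \) are \( \mu^2 + \sigma^2 = 2 \) and \( 4\mu^2\sigma^2 + 2\sigma^4 = 6 \). The sigma-point version recovers both (up to rounding), while the linearized version returns a mean of 1 and a variance of 4, i.e. it is biased and underestimates the spread.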

-

Stop using Extended Kalman Filter and Use Instead ...

TL;DR: The Extended Kalman Filter (EKF) often underestimates uncertainty on nonlinear systems, can become inconsistent or even diverge, is brittle to modeling/tuning errors, and struggles with constraints and multi-modal posteriors. Modern alternatives—Unscented/σ-point filters, smoothing/factor-graph methods, particle filters, or optimization-based Moving Horizon Estimation—are usually safer and more accurate.

-

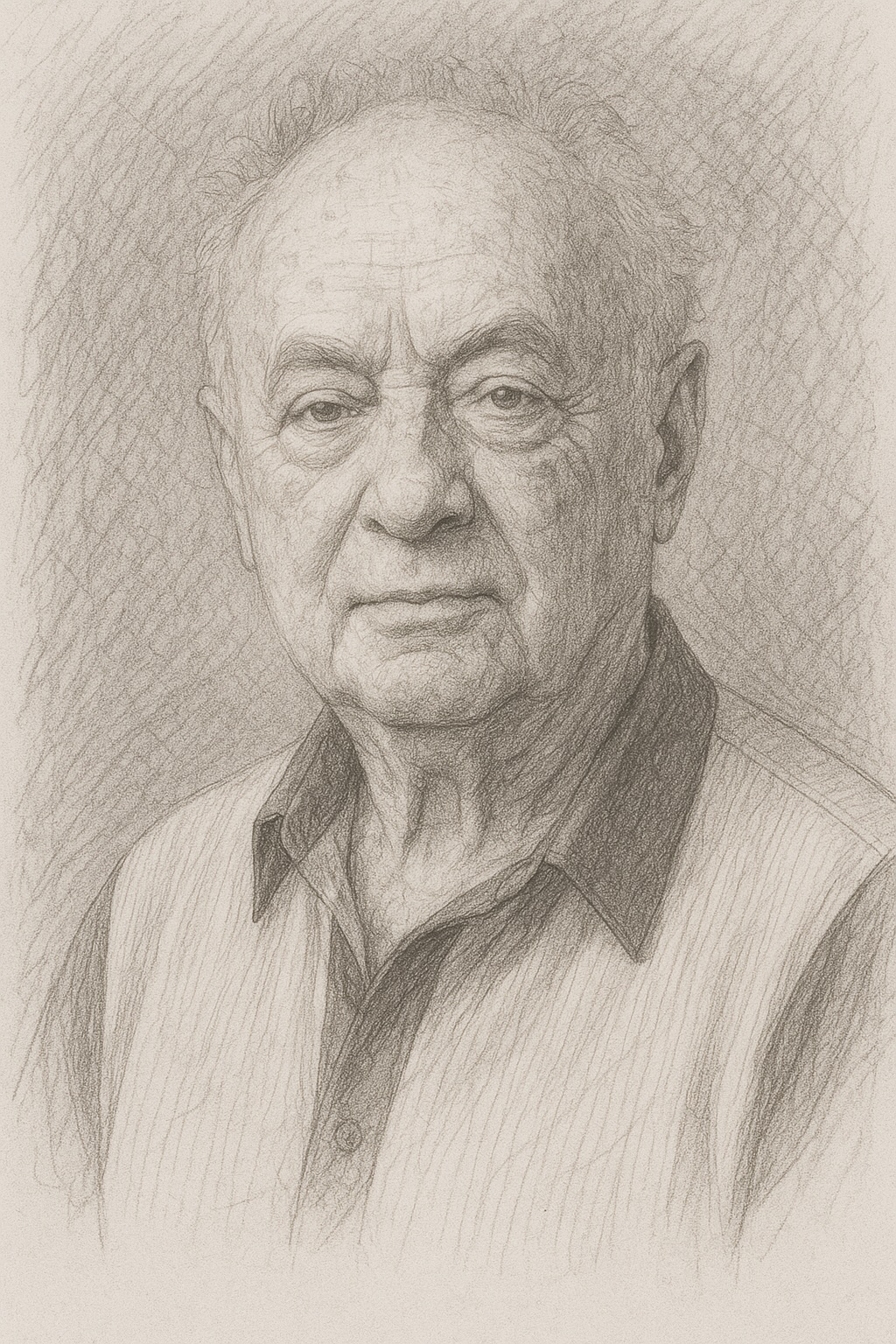

Yaakov Bar-Shalom

Yaakov Bar-Shalom (born May 11, 1941) is an Israeli-American electrical engineer and academic renowned for his pioneering contributions to the fields of target tracking, estimation, and sensor fusion.

Yaakov Bar-Shalom was born in Romania, where, as a teenager, he developed an early interest in mathematics and science, inspired by his high school math teacher and later by a professor at the Polytechnic Institute in Bucharest. At age 19, he and his family emigrated to Israel. Despite the challenges of adapting to a new country and language, he quickly excelled, graduating cum laude with a Bachelor's and subsequently a Master's degree in Electrical Engineering from the Technion in Haifa, Israel. He then moved to the United States, earning his Ph.D. in Electrical Engineering from Princeton University under the supervision of Stuart Schwartz.

-

Failure Modes

Kalman filters are powerful tools for state estimation, blending predictions with noisy measurements to produce optimal system state estimates. Despite their utility, Kalman filters can exhibit various failure modes if certain conditions or assumptions aren’t properly managed. Understanding these failure modes is crucial to ensuring robustness and reliability in implementations.

-

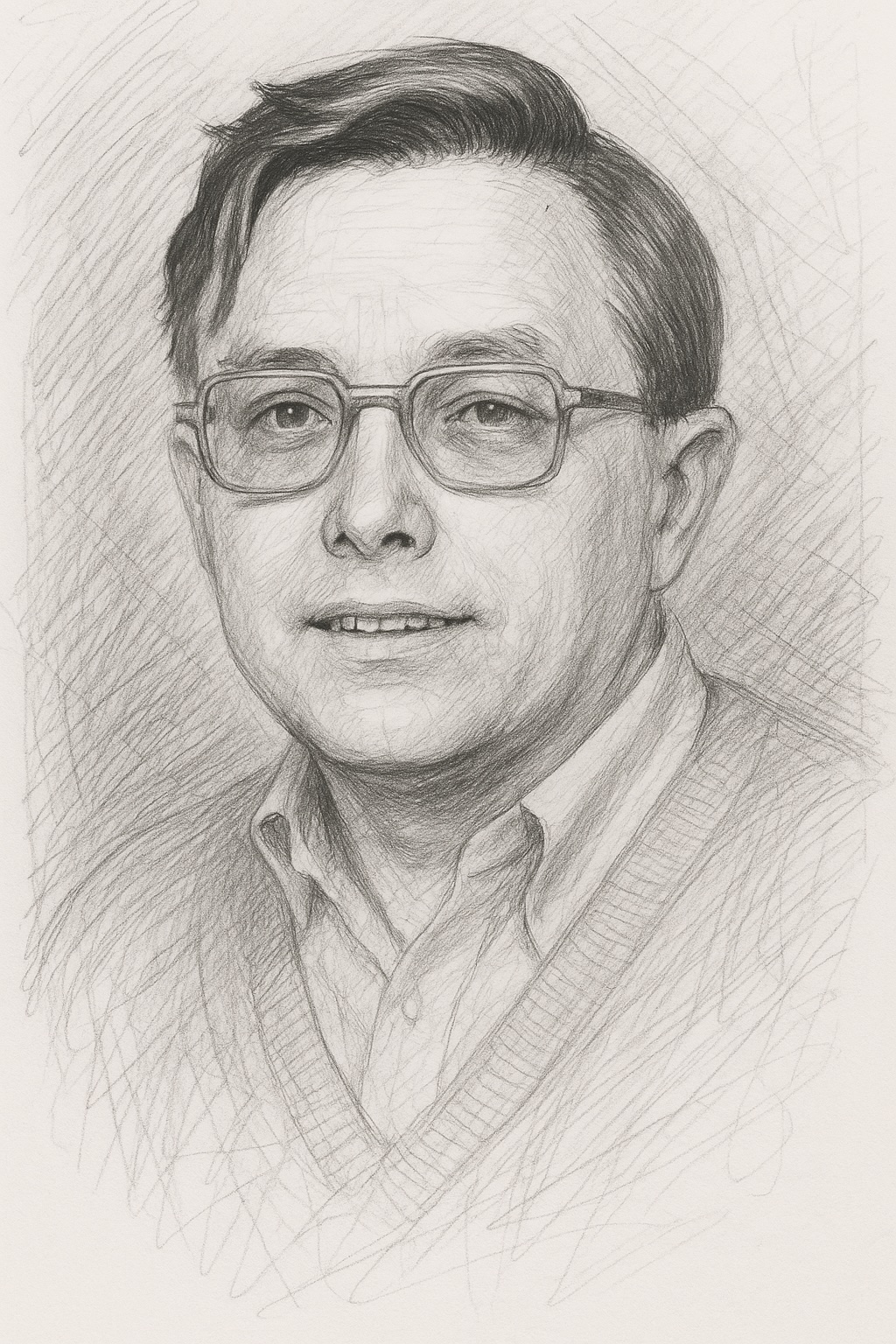

Richard S. Bucy

Richard Snowden Bucy (July 20, 1935 – 2019) was a prominent American mathematician and engineering scientist, celebrated for his foundational role in the development of modern control theory and signal processing. He is best known as the co-inventor of the Kalman–Bucy filter, a cornerstone algorithm in the estimation of dynamic systems, which has had transformative impacts across aerospace engineering, robotics, and autonomous navigation.

Richard S. Bucy was born on July 20, 1935. While details about his early education remain limited, his later academic accomplishments and affiliations reflect a rigorous background in mathematics, physics, and engineering disciplines, fields in which he would later leave a lasting legacy.

-

Joseph form

One of the biggest challenges in implementing Kalman filters is that numerical errors can cause the covariance matrices to lose their symmetry and positive definiteness, which makes the filter diverge so that it no longer provides a usable estimate. This happens especially in system models with many degrees of freedom. The remedy is to use numerically more stable equations. For the covariance update, this is the so-called Joseph form, which requires more computation but is less sensitive to numerical errors.
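A minimal sketch comparing the Joseph form \( \mathbf{P}^+ = (\mathbf{I} - \mathbf{K}\mathbf{H})\mathbf{P}(\mathbf{I} - \mathbf{K}\mathbf{H})^T + \mathbf{K}\mathbf{R}\mathbf{K}^T \) with the short form \( (\mathbf{I} - \mathbf{K}\mathbf{H})\mathbf{P} \), using plain Python lists as matrices. The numbers and helper names are illustrative assumptions. With the optimal gain both forms agree; with a slightly wrong gain (as happens when round-off perturbs \( \mathbf{K} \)), only the Joseph form stays symmetric and remains a valid covariance.

```python
def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(A):
    return [list(row) for row in zip(*A)]

def i_minus_kh(K, H):
    """Return I - K H for an n x m gain K and an m x n measurement matrix H."""
    KH = matmul(K, H)
    n = len(KH)
    return [[(1.0 if i == j else 0.0) - KH[i][j] for j in range(n)] for i in range(n)]

def joseph_update(P, H, R, K):
    """Joseph form: P+ = (I - K H) P (I - K H)^T + K R K^T, valid for any gain K."""
    A = i_minus_kh(K, H)
    first = matmul(matmul(A, P), transpose(A))
    second = matmul(matmul(K, R), transpose(K))
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(first, second)]

def short_update(P, H, K):
    """Short form P+ = (I - K H) P: correct only for the optimal gain, not symmetric by construction."""
    return matmul(i_minus_kh(K, H), P)

P = [[4.0, 1.0], [1.0, 2.0]]
H = [[1.0, 0.0]]
R = [[1.0]]
K_opt = [[0.8], [0.2]]   # optimal gain P H^T / (H P H^T + R) for these values
K_sub = [[0.5], [0.0]]   # deliberately suboptimal gain

P_joseph_opt = joseph_update(P, H, R, K_opt)
P_joseph_sub = joseph_update(P, H, R, K_sub)
P_short_sub = short_update(P, H, K_sub)
```

Here `P_short_sub` comes out as `[[2.0, 0.5], [1.0, 2.0]]`, which is no longer symmetric, whereas `P_joseph_sub` is `[[1.25, 0.5], [0.5, 2.0]]`.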

-

Root Mean Square Error (RMSE)

To evaluate the performance of state estimators (e.g., the Kalman filter), the estimation error \( \tilde{\mathbf{x}}(k) = \mathbf{x}(k) - \hat{\mathbf{x}}(k) \) is analyzed. The root mean square error (RMSE), a widely used quality measure, is well suited for this purpose. It is based on the estimation error \( \tilde{\mathbf{x}}(k) \) at each time step \( k \in \{1, \dots, K\} \).

In simulation

For a simulation with a length of \( K \) time steps, the RMSE is averaged over \( N \) Monte Carlo runs to achieve high statistical significance: \[ \text{RMSE}(\tilde{\mathbf{x}}(k)) = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left( \tilde{x}^i_1(k)^2 + \dots + \tilde{x}^i_n(k)^2 \right)} \] where \( n = \dim(\tilde{\mathbf{x}}(k)) \) is the dimension of the state vector and \( \tilde{x}^i_j(k) \) is the \( j \)-th component of the estimation error in run \( i \).
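The Monte Carlo RMSE formula above can be sketched per time step as follows, assuming the errors are stored as `errors[i][k]`, the error vector of run \( i \) at step \( k \). The function name and data layout are illustrative assumptions.

```python
import math

def rmse_per_step(errors):
    """RMSE per time step, averaged over Monte Carlo runs.

    errors[i][k] is the estimation-error vector x(k) - x_hat(k) of run i at step k.
    """
    num_runs = len(errors)
    num_steps = len(errors[0])
    return [
        math.sqrt(sum(sum(e * e for e in errors[i][k]) for i in range(num_runs)) / num_runs)
        for k in range(num_steps)
    ]

# Two runs (N = 2), two time steps, two-dimensional state (n = 2)
errors = [
    [[3.0, 4.0], [1.0, 0.0]],   # run 1
    [[4.0, 3.0], [0.0, 1.0]],   # run 2
]
rmse = rmse_per_step(errors)   # [5.0, 1.0]
```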

-

Normalized Estimation Error Squared (NEES)

A desirable property of a state estimator (e.g., the Kalman filter) is the ability to correctly indicate the quality of its own estimate. This ability is called consistency, and it directly affects the estimation error \( \tilde{\mathbf{x}}(k) \): an inconsistent state estimator does not provide the optimal result. As the number of samples grows, so does the information content, and the state estimate \( \hat{\mathbf{x}}(k) \) should approach the true state \( \mathbf{x}(k) \) as closely as possible. This follows from the requirement that a state estimator be unbiased, which mathematically means that the expected value of the estimation error \( \tilde{\mathbf{x}}(k) \) is zero: \[ E [ \mathbf{x}(k) - \hat{\mathbf{x}}(k) ] = E [ \tilde{\mathbf{x}}(k) ] \overset{!}{=} 0 \]
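The excerpt stops before the NEES definition itself. The commonly used form is \( \epsilon(k) = \tilde{\mathbf{x}}(k)^T \mathbf{P}(k)^{-1} \tilde{\mathbf{x}}(k) \), where \( \mathbf{P}(k) \) is the filter's estimation error covariance; for a consistent filter it is chi-square distributed with \( n \) degrees of freedom, so its Monte Carlo average should be close to \( n \). A minimal sketch, solving \( \mathbf{P}\mathbf{y} = \tilde{\mathbf{x}} \) instead of inverting \( \mathbf{P} \); the helper names are hypothetical.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting (small dense systems)."""
    n = len(A)
    M = [list(map(float, row)) + [float(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def nees(error, P):
    """NEES = e^T P^{-1} e for one estimation-error vector e and its covariance P."""
    y = solve(P, error)
    return sum(e * yi for e, yi in zip(error, y))

def average_nees(errors, covariances):
    """Average NEES over Monte Carlo runs at one time step; close to n for a consistent filter."""
    return sum(nees(e, P) for e, P in zip(errors, covariances)) / len(errors)

eps_diag = nees([1.0, 2.0], [[1.0, 0.0], [0.0, 4.0]])   # 1 + 4/4 = 2.0
eps_corr = nees([1.0, 1.0], [[2.0, 1.0], [1.0, 2.0]])   # approximately 2/3
```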

-

Estimating the state of a DC motor

This example focuses on estimating the angular position \( \theta \), angular velocity \( \dot{\theta} \), and armature current \( i \) of a DC motor with a linear Kalman filter. It should be noted that nonlinear DC motor models are certainly superior to linear ones. For didactic purposes, however, the following widely used linear model is sufficient for the time being.
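The excerpt does not show the model itself. A commonly used linear form, which may differ in detail from the one in the post, is \( J\ddot{\theta} = -b\dot{\theta} + K i \) and \( L\,\tfrac{di}{dt} = -K\dot{\theta} - R i + V \), with state \( [\theta, \dot{\theta}, i]^T \) and armature voltage \( V \) as input. The sketch below builds the continuous-time matrices; the parameter values and the assumption that only \( \theta \) is measured are illustrative placeholders, not taken from the post.

```python
def dc_motor_model(J, b, K, R, L):
    """Continuous-time linear DC motor model with state x = [theta, theta_dot, i] and input V.

    J theta_ddot = -b theta_dot + K i
    L di/dt      = -K theta_dot - R i + V
    (torque and back-EMF constants assumed equal, K_t = K_e = K, in SI units)
    """
    A = [
        [0.0, 1.0, 0.0],
        [0.0, -b / J, K / J],
        [0.0, -K / L, -R / L],
    ]
    B = [[0.0], [0.0], [1.0 / L]]
    return A, B

def state_derivative(A, B, x, u):
    """x_dot = A x + B u for a scalar input u (armature voltage)."""
    return [sum(A[i][j] * x[j] for j in range(len(x))) + B[i][0] * u for i in range(len(x))]

# Illustrative placeholder parameters (hypothetical, not from the post)
A, B = dc_motor_model(J=0.01, b=0.1, K=0.01, R=1.0, L=0.5)
H = [[1.0, 0.0, 0.0]]   # assumed: only the angular position is measured
xdot = state_derivative(A, B, [0.0, 0.0, 0.0], 1.0)   # a motor at rest: only di/dt = V / L is nonzero
```

Before use in a Kalman filter, \( \mathbf{A} \) and \( \mathbf{B} \) still have to be discretized.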

-

Multiple Model State Estimation

The performance of a model-based state estimator depends critically on how well the model matches the process being observed. In practice, the question arises whether the process always behaves according to exactly one model or switches between different models. The solution is to account for this by using multiple models, an approach referred to in the literature as multiple model estimation. The challenge lies both in determining how the process behaves (according to a single model or a mixture of several) and in the rules by which it switches between models.
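One common building block of multiple model estimators is the recursive update of the model probabilities: a Markov transition matrix encodes the switching rules, and each model is then reweighted by the Gaussian likelihood of its own filter's innovation. The sketch below covers only this probability bookkeeping for scalar innovations (the mode-probability part of an IMM-style filter; the state mixing step is omitted). The transition probabilities and innovations are illustrative assumptions.

```python
import math

def gaussian_likelihood(nu, S):
    """Likelihood of a scalar innovation nu under N(0, S)."""
    return math.exp(-0.5 * nu * nu / S) / math.sqrt(2.0 * math.pi * S)

def predict_model_probabilities(mu, transition):
    """Markov switching: mu_pred[j] = sum_i transition[i][j] * mu[i]."""
    n = len(mu)
    return [sum(transition[i][j] * mu[i] for i in range(n)) for j in range(n)]

def update_model_probabilities(mu_pred, innovations, variances):
    """Bayes update: weight each model by its innovation likelihood, then normalize."""
    weighted = [m * gaussian_likelihood(nu, S) for m, nu, S in zip(mu_pred, innovations, variances)]
    total = sum(weighted)
    return [w / total for w in weighted]

transition = [[0.95, 0.05], [0.05, 0.95]]   # illustrative switching probabilities
mu = [0.5, 0.5]
mu_pred = predict_model_probabilities(mu, transition)
# Model 1's filter explains the measurement well (small innovation), model 2's does not
mu = update_model_probabilities(mu_pred, innovations=[0.1, 2.0], variances=[1.0, 1.0])
```

After this step the probability of model 1 rises to roughly 0.88.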

-

Normalized Innovation Squared (NIS)

The Normalized Innovation Squared (NIS) metric can be used to check whether the Kalman filter is consistent, based on the measurement residual (innovation) \( \nu (k) \) and the associated innovation covariance matrix \( \mathbf{S}(k) \).
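A minimal sketch of the usual NIS definition \( \epsilon_\nu(k) = \nu(k)^T \mathbf{S}(k)^{-1} \nu(k) \), which for a consistent filter follows a chi-square distribution with \( m \) degrees of freedom (the measurement dimension). It assumes a two-dimensional measurement so that \( \mathbf{S} \) can be inverted in closed form; the function names are hypothetical, and the chi-square acceptance bounds are not computed here.

```python
def nis_2d(nu, S):
    """NIS = nu^T S^{-1} nu for a two-dimensional innovation (closed-form 2x2 inverse)."""
    (a, b), (c, d) = S
    det = a * d - b * c
    S_inv = [[d / det, -b / det], [-c / det, a / det]]
    return sum(nu[i] * S_inv[i][j] * nu[j] for i in range(2) for j in range(2))

def time_averaged_nis(innovations, covariances):
    """Mean NIS over a run; for a consistent filter it should be close to m = 2."""
    values = [nis_2d(nu, S) for nu, S in zip(innovations, covariances)]
    return sum(values) / len(values)

S = [[1.0, 0.0], [0.0, 4.0]]
eps = nis_2d([1.0, 2.0], S)                                    # 1 + 4/4 = 2.0
eps_mean = time_averaged_nis([[1.0, 2.0], [2.0, 0.0]], [S, S])  # (2 + 4) / 2 = 3.0
```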

-

What is a Kalman-Filter?

A Kalman filter is a powerful algorithm used in statistics and control theory for estimating the state of a system from a series of noisy measurements.
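As a minimal illustration of this idea, the sketch below estimates a constant scalar from noisy measurements with a one-dimensional Kalman filter: each step predicts the state and its variance, then blends the prediction with the new measurement according to their variances. The random-walk model, the measurement values, and the noise variances are illustrative assumptions.

```python
def kalman_filter_1d(measurements, x0, P0, Q, R):
    """Scalar Kalman filter: state model x_k = x_{k-1} + w (var Q), measurement z_k = x_k + v (var R)."""
    x, P = x0, P0
    history = []
    for z in measurements:
        # Predict: random-walk model, so only the variance grows
        P = P + Q
        # Update: the gain K weighs the measurement against the prediction
        K = P / (P + R)
        x = x + K * (z - x)
        P = (1.0 - K) * P
        history.append((x, P))
    return history

# Noisy readings of a true value of about 5; vague initial guess x0 = 0 with large variance
history = kalman_filter_1d([4.9, 5.1, 5.0, 4.8, 5.2], x0=0.0, P0=1000.0, Q=0.0, R=0.1)
```

The final estimate is close to 5, and the estimate variance shrinks with every measurement.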

-

How to tune a Kalman-Filter?

Tuning a Kalman filter means adjusting its parameters, specifically the process and measurement noise covariances, to optimize its performance. The post outlines key points and methods for tuning a Kalman filter.
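One way to build intuition for the process noise \( Q \) and measurement noise \( R \) is to look at the steady-state gain of a scalar random-walk filter, which depends only on the ratio \( Q/R \): a larger ratio makes the filter trust measurements more and react faster. This is a sketch under that simple model, not a complete tuning method; the function name is hypothetical.

```python
def steady_state_gain(Q, R, iterations=1000):
    """Iterate the scalar Riccati recursion of a random-walk model until the gain settles."""
    P = R
    K = 0.0
    for _ in range(iterations):
        P_prior = P + Q                  # predict
        K = P_prior / (P_prior + R)      # gain
        P = (1.0 - K) * P_prior          # update
    return K

gains = {ratio: steady_state_gain(Q=ratio, R=1.0) for ratio in (0.01, 1.0, 100.0)}
```

For \( Q = R \) the steady-state gain is \( (\sqrt{5} - 1)/2 \approx 0.618 \); for \( Q/R = 0.01 \) it drops to roughly 0.1, giving a smooth but sluggish estimate.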

-

Pairs trading

A Kalman filter can be used in a trading strategy that is known as “Pairs Trading” or “Statistical Arbitrage Trading”. Pairs trading aims to capitalize on the mean-reverting tendencies of a specific portfolio. The foundational assumption of this strategy is that the spread of co-integrated instruments is inherently mean-reverting. Therefore, significant deviations from the mean are viewed as potential opportunities for arbitrage.
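A common way to use the Kalman filter here is to estimate a time-varying hedge ratio \( \beta_k \) between two price series, with \( y_k = \beta_k x_k + v_k \) and a random-walk state \( \beta_k = \beta_{k-1} + w_k \); the innovation \( y_k - \hat{\beta}_{k|k-1} x_k \) then serves as the spread. This sketch assumes no intercept term, arbitrary noise values `q` and `r`, and synthetic prices; entry and exit thresholds are not included.

```python
def kalman_hedge_ratio(xs, ys, q=1e-5, r=1e-3, beta0=0.0, p0=1.0):
    """Track a hedge ratio beta in y = beta * x + noise; returns betas, spreads, spread variances."""
    beta, p = beta0, p0
    betas, spreads, spread_vars = [], [], []
    for x, y in zip(xs, ys):
        p = p + q                 # predict: hedge ratio follows a random walk
        spread = y - beta * x     # innovation = spread w.r.t. the predicted hedge ratio
        s = x * p * x + r         # innovation (spread) variance
        k = p * x / s             # Kalman gain with measurement "matrix" H = x
        beta = beta + k * spread
        p = (1.0 - k * x) * p
        betas.append(beta)
        spreads.append(spread)
        spread_vars.append(s)
    return betas, spreads, spread_vars

# Synthetic, perfectly cointegrated pair with a true hedge ratio of 2
xs = [10.0 + 0.1 * i for i in range(200)]
ys = [2.0 * x for x in xs]
betas, spreads, spread_vars = kalman_hedge_ratio(xs, ys)
```

In a trading rule, the spread would typically be compared against a multiple of \( \sqrt{s_k} \) to flag significant deviations from the mean.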

-

Cubature Kalman Filter

The Cubature Kalman Filter (CKF) is one of the newest representatives of the sigma-point methods. Its selection of sigma points differs slightly from that of the Unscented Kalman Filter (UKF) and is based on the cubature rule derived by Arasaratnam and Haykin [1]. Like the UKF, the CKF follows the idea that it is easier to approximate a probability distribution than to linearize a nonlinear function.
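A minimal sketch of the third-degree cubature rule used in the CKF: \( 2n \) points \( \mathbf{m} \pm \sqrt{n}\,\mathbf{L}\mathbf{e}_i \) with equal weights \( 1/(2n) \), where \( \mathbf{L}\mathbf{L}^T = \mathbf{P} \) is a Cholesky factor. Unlike the UKF, there is no central point and no tuning parameter. The helper names and the example values are illustrative assumptions; process noise is not added.

```python
import math

def cholesky(P):
    """Lower-triangular L with L L^T = P (P symmetric positive definite)."""
    n = len(P)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(P[i][i] - s)
            else:
                L[i][j] = (P[i][j] - s) / L[j][j]
    return L

def cubature_points(m, P):
    """2n points m +/- sqrt(n) * (column i of L), each with weight 1 / (2n)."""
    n = len(m)
    L = cholesky(P)
    scale = math.sqrt(n)
    points = []
    for i in range(n):
        column = [L[r][i] for r in range(n)]
        points.append([m[r] + scale * column[r] for r in range(n)])
        points.append([m[r] - scale * column[r] for r in range(n)])
    return points

def cubature_transform(m, P, f):
    """Approximate the mean and covariance of f(x) for x ~ N(m, P) with the cubature rule."""
    ys = [f(p) for p in cubature_points(m, P)]
    w = 1.0 / len(ys)
    dim = len(ys[0])
    mean = [w * sum(y[r] for y in ys) for r in range(dim)]
    cov = [[w * sum((y[r] - mean[r]) * (y[c] - mean[c]) for y in ys) for c in range(dim)]
           for r in range(dim)]
    return mean, cov

# Sanity check: the identity function must reproduce the input mean and covariance
mean, cov = cubature_transform([1.0, 2.0], [[4.0, 2.0], [2.0, 3.0]], lambda x: x)
```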

-

Discretization of linear state-space by various approaches

In practice, the discretization of the state-space equations is of particular importance.
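As a sketch of two approaches that are often compared, the code below discretizes \( \dot{\mathbf{x}} = \mathbf{A}\mathbf{x} + \mathbf{B}\mathbf{u} \) with sample time \( T \) using forward Euler (\( \mathbf{A}_d = \mathbf{I} + \mathbf{A}T \), \( \mathbf{B}_d = \mathbf{B}T \)) and using the exact zero-order-hold solution \( \mathbf{A}_d = e^{\mathbf{A}T} \), \( \mathbf{B}_d = \int_0^T e^{\mathbf{A}\tau} d\tau\, \mathbf{B} \). The matrix exponential is approximated by a truncated power series, which is only adequate for modest \( \lVert \mathbf{A}T \rVert \); in production code, `scipy.linalg.expm` or `scipy.signal.cont2discrete` would be the usual choices. Whether the post uses these particular approaches is an assumption.

```python
import math

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def discretize_euler(A, B, T):
    """Forward Euler: A_d = I + A T, B_d = B T (first-order approximation)."""
    n = len(A)
    Ad = [[(1.0 if i == j else 0.0) + A[i][j] * T for j in range(n)] for i in range(n)]
    Bd = [[b * T for b in row] for row in B]
    return Ad, Bd

def discretize_zoh(A, B, T, terms=20):
    """Zero-order hold via truncated series:
    A_d = sum_k (A T)^k / k!,   B_d = (sum_k A^k T^(k+1) / (k+1)!) B."""
    n = len(A)
    Ad = [[0.0] * n for _ in range(n)]
    Gamma = [[0.0] * n for _ in range(n)]   # approximates the integral of e^(A tau) over [0, T]
    Ak = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]   # A^0 = I
    for k in range(terms):
        ca = T ** k / math.factorial(k)
        cg = T ** (k + 1) / math.factorial(k + 1)
        for i in range(n):
            for j in range(n):
                Ad[i][j] += ca * Ak[i][j]
                Gamma[i][j] += cg * Ak[i][j]
        Ak = matmul(Ak, A)
    return Ad, matmul(Gamma, B)

# Double integrator (position, velocity) driven by an acceleration input
A = [[0.0, 1.0], [0.0, 0.0]]
B = [[0.0], [1.0]]
Ad_zoh, Bd_zoh = discretize_zoh(A, B, T=0.1)
Ad_eul, Bd_eul = discretize_euler(A, B, T=0.1)
```

For the double integrator both methods give the same \( \mathbf{A}_d \), but Euler yields \( \mathbf{B}_d = [0, T]^T \) and misses the \( T^2/2 \) position term that the zero-order hold captures.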
